14 Laravel services migrated from a single VM to ECS with elastic autoscaling and resilient data layers

We containerized 14 Laravel/PHP services, introduced a shared base image to cut image build time from ~10 minutes to ~3 minutes, and moved Redis to ElastiCache, MariaDB to Amazon RDS, and RabbitMQ to a tuned EC2 instance.

The client ran everything (14 Laravel services, Redis, RabbitMQ) on one VM, creating a single point of failure and preventing independent scaling. We rebuilt the platform around containers and Amazon ECS on EC2, with per-service scaling, managed data stores, and environment isolation across separate VPCs for dev and prod. A custom base image cut build time by ~70%, and CI/CD now ships every service through ECR to ECS with automated deploy checks. Costs now track demand: a minimal footprint at low usage, and higher throughput at peak with commensurate spend. The objective was elasticity, safety, and throughput, not flat-cost reduction.

Laravel services containerized: 14

Image build time reduction: 70%

Autoscaling layers: 2

The team needed fault isolation and elastic capacity without overprovisioning or risking a full outage from a single VM.

Single-VM blast radius

Redis, RabbitMQ, and application code co-located on one machine meant any host failure would take down queues, cache, and 14 services simultaneously.

Uneven traffic and php-fpm tuning per service

Services saw very different loads, but php-fpm previously ran as a shared pool. Once containerized, each service needed its ECS CPU/memory allocation and its php-fpm settings (memory_limit, pm.max_children, etc.) sized together, to avoid resource contention without wasting headroom.
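The case study doesn't state the sizing rule used for each service. As a rough sketch of one common approach, pm.max_children can be derived from the task's hard memory limit. The Python below assumes a fixed amount reserved for the php-fpm master, web server and OPcache, plus an average worker size measured under real traffic; the function names and example figures are hypothetical.

```python
import math


def max_children(task_memory_mib: int, reserved_mib: int, avg_worker_mib: float) -> int:
    """Estimate pm.max_children so php-fpm workers fit in the ECS task's memory.

    task_memory_mib: hard memory limit of the container (the task definition's `memory`).
    reserved_mib:    memory held back for php-fpm master, web server, OPcache (assumed figure).
    avg_worker_mib:  average RSS of one php-fpm worker, measured under real traffic.
    """
    available = task_memory_mib - reserved_mib
    if available <= 0 or avg_worker_mib <= 0:
        raise ValueError("reserved memory must be below the task limit and worker size positive")
    # Round down so max_children * avg_worker_mib never exceeds the available memory.
    children = math.floor(available / avg_worker_mib)
    if children < 1:
        raise ValueError("not enough memory for even one php-fpm worker")
    return children


def worst_case_mib(children: int, memory_limit_mib: int, reserved_mib: int) -> int:
    """Memory needed if every worker reaches PHP's memory_limit at the same time."""
    return children * memory_limit_mib + reserved_mib


if __name__ == "__main__":
    children = max_children(task_memory_mib=1024, reserved_mib=128, avg_worker_mib=60)
    print(f"pm.max_children = {children}")                           # 14
    print(f"worst case = {worst_case_mib(children, 128, 128)} MiB")  # 1920
```

With a 1024 MiB task, 128 MiB reserved and ~60 MiB per worker, this gives pm.max_children = 14. The worst-case check shows why memory_limit and pm.max_children have to be tuned together: if all 14 workers hit a 128 MiB memory_limit at once, the container would need about 1920 MiB, exceed its 1024 MiB hard limit, and be killed.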

No native ECS deployment callbacks

ECS offers no first-class hook that reports rollout success or failure back to the pipeline, which limited CI/CD visibility and slowed incident response during deploys.
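The case study mentions a custom ECS deploy status notifier but not how it works. A minimal sketch, assuming the pipeline polls the ECS DescribeServices API after updating a service: it reads the PRIMARY deployment's `rolloutState` (reported for the rolling-update deployment type) and otherwise falls back to comparing `runningCount` with `desiredCount`. The function names, timeout and poll interval are hypothetical, and sending the result to chat or the CI system is left out.

```python
import time
from typing import Any, Callable, Dict


def rollout_status(service: Dict[str, Any]) -> str:
    """Classify one entry of a DescribeServices response's `services` list
    as 'succeeded', 'failed' or 'in_progress'."""
    deployments = service.get("deployments", [])
    primary = next((d for d in deployments if d.get("status") == "PRIMARY"), None)
    if primary is None:
        return "in_progress"
    state = primary.get("rolloutState")
    if state == "FAILED":
        return "failed"
    if state == "COMPLETED":
        return "succeeded"
    # Fallback when rolloutState is not reported: treat the rollout as done once
    # old deployments have drained and every desired task is running.
    if (state is None and len(deployments) == 1
            and primary.get("runningCount") == primary.get("desiredCount")):
        return "succeeded"
    return "in_progress"


def wait_for_rollout(fetch_service: Callable[[], Dict[str, Any]],
                     timeout_s: float = 900, interval_s: float = 15,
                     sleep: Callable[[float], None] = time.sleep,
                     clock: Callable[[], float] = time.monotonic) -> str:
    """Poll until the rollout settles; return its status or 'timed_out'."""
    deadline = clock() + timeout_s
    while True:
        status = rollout_status(fetch_service())
        if status != "in_progress":
            return status
        if clock() >= deadline:
            return "timed_out"
        sleep(interval_s)
```

In a pipeline, `fetch_service` could be `lambda: ecs.describe_services(cluster=CLUSTER, services=[SERVICE])["services"][0]` with a boto3 ECS client (`CLUSTER` and `SERVICE` are placeholders). Any result other than "succeeded" would fail the job and trigger the notification. Injecting `sleep` and `clock` keeps the loop testable without waiting in real time.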

We built an ECS-on-EC2 platform with Terraform, ECR, and managed data services to achieve per-service scaling, resilience, and CI/CD you can trust under load.

Containerization with a shared base image

Each Laravel service was dockerized and pushed to its own ECR repository per environment. A custom base image bundles the common dependencies, cutting average image build time from ~10 minutes to ~3 minutes and standardizing runtimes across services.
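The headline figure follows directly from the reported averages. The short Python sketch below reproduces it and, assuming each of the 14 images is rebuilt once and builds run one after another (an assumption, since real pipelines may build in parallel), estimates the build minutes saved per full rebuild. The function names are hypothetical.

```python
def reduction(before_min: float, after_min: float) -> float:
    """Fractional reduction in build time, e.g. 0.7 for ~10 -> ~3 minutes."""
    if before_min <= 0:
        raise ValueError("before_min must be positive")
    return (before_min - after_min) / before_min


def fleet_minutes_saved(services: int, before_min: float, after_min: float) -> float:
    """Minutes saved when every service image is rebuilt once, sequentially."""
    return services * (before_min - after_min)


if __name__ == "__main__":
    print(f"{reduction(10, 3):.0%}")       # 70%
    print(fleet_minutes_saved(14, 10, 3))  # 98
```

Under these assumptions, a full rebuild of all 14 services saves roughly 98 build minutes, presumably because the shared dependency installation now comes prebuilt in the base image instead of running in every service build.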

Resilient data and messaging layers

Redis moved to Amazon ElastiCache for high availability and lower operational overhead. MariaDB migrated to Amazon RDS for managed backups, monitoring, and scaling. RabbitMQ runs on a dedicated, tuned EC2 instance that balances cost and performance, because Amazon MQ's instance-size and pricing steps didn't fit the workload.

Orchestration on Amazon ECS (EC2 launch type) with dual autoscaling

We selected ECS on EC2 for tighter compute control. EC2 Auto Scaling Groups scale cluster nodes; ECS Service Auto Scaling scales tasks per service. This matches uneven traffic profiles and removes VM-level contention.
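The two scaling layers can be illustrated with a simplified Python model. It assumes task counts follow a proportional target-tracking rule (Application Auto Scaling's real target tracking also applies cooldowns and scales in more gradually than it scales out) and estimates the minimum number of EC2 nodes from total requested CPU and memory, ignoring bin-packing fragmentation and daemon tasks. Service names, task sizes and node capacity are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ServiceSpec:
    name: str
    task_cpu: int      # ECS CPU units per task (1024 = 1 vCPU)
    task_memory: int   # MiB per task
    min_tasks: int
    max_tasks: int


def desired_tasks(spec: ServiceSpec, current_tasks: int,
                  current_metric: float, target_metric: float) -> int:
    """Task layer: scale the count so the per-task metric (e.g. average CPU %)
    moves toward the target, clamped to the service's min/max."""
    if current_tasks <= 0 or target_metric <= 0:
        raise ValueError("current_tasks and target_metric must be positive")
    wanted = math.ceil(current_tasks * current_metric / target_metric)
    return min(spec.max_tasks, max(spec.min_tasks, wanted))


def nodes_needed(placements: List[Tuple[ServiceSpec, int]],
                 node_cpu: int, node_memory: int) -> int:
    """Node layer: lower bound on EC2 instances for the requested task resources.
    Whichever of CPU or memory runs out first decides the count."""
    total_cpu = sum(spec.task_cpu * count for spec, count in placements)
    total_mem = sum(spec.task_memory * count for spec, count in placements)
    return max(math.ceil(total_cpu / node_cpu), math.ceil(total_mem / node_memory))


if __name__ == "__main__":
    api = ServiceSpec("api", task_cpu=512, task_memory=1024, min_tasks=2, max_tasks=10)
    worker = ServiceSpec("worker", task_cpu=256, task_memory=512, min_tasks=1, max_tasks=8)
    api_tasks = desired_tasks(api, current_tasks=4, current_metric=90.0, target_metric=60.0)
    print(api_tasks)  # 6
    print(nodes_needed([(api, api_tasks), (worker, 4)], node_cpu=4096, node_memory=7680))  # 2
```

In this example the API service grows from 4 to 6 tasks, and memory rather than CPU forces a second node. Each service scales on its own signal, while the node layer only has to cover the combined reservation.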

Hitting the limits of a single VM but can’t afford dropped requests during spikes?

Ready to start your cloud journey with us?

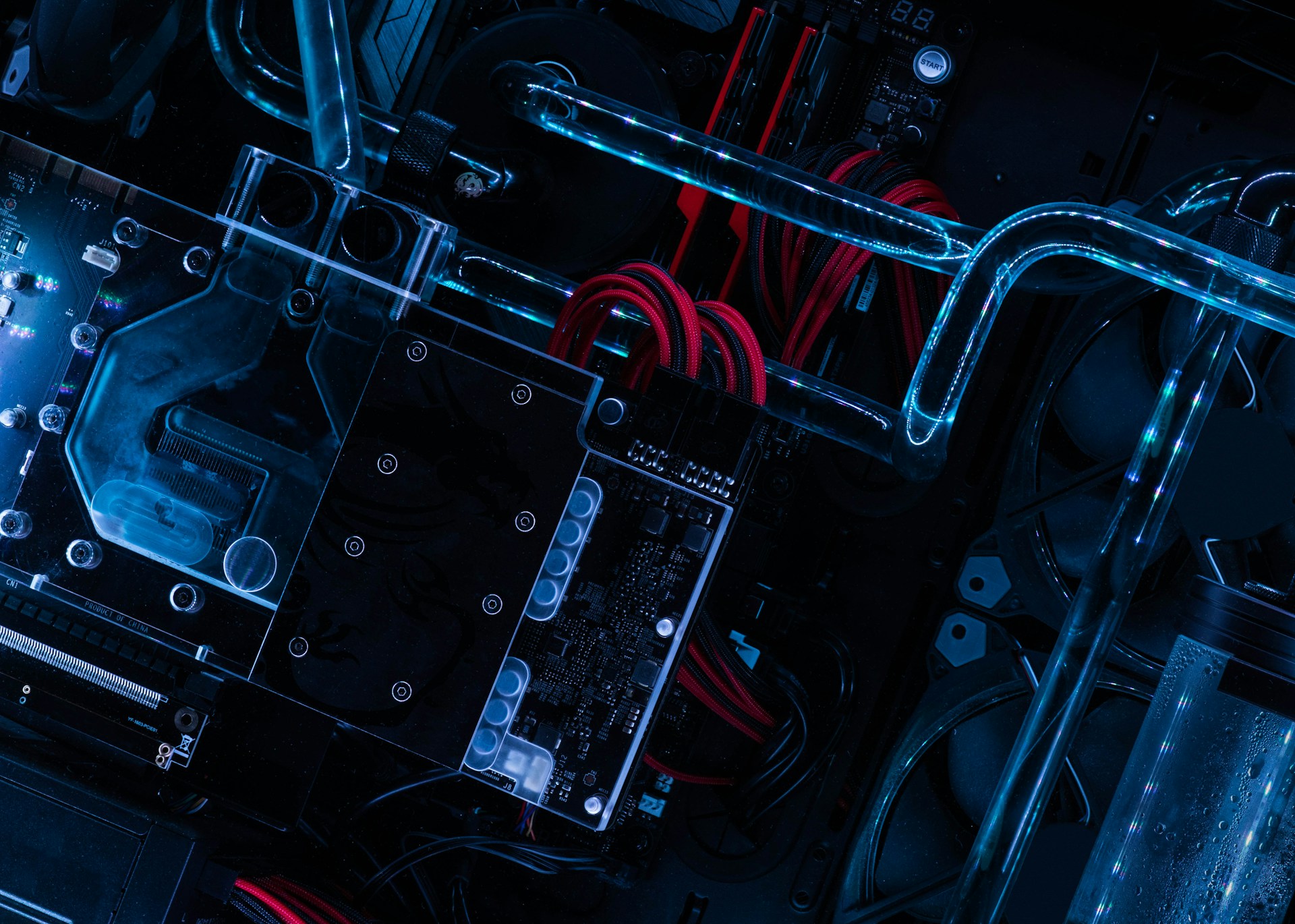

Technologies we used

• Docker (custom base image for PHP/Laravel services)

• Amazon ECS on EC2 (task definitions, services)

• EC2 Auto Scaling Groups; ECS Service Auto Scaling

• Amazon ECR (per-service, per-environment repositories)

• Amazon ElastiCache for Redis

• Amazon RDS for MariaDB

• RabbitMQ on EC2 (tuned instance, chosen over Amazon MQ by design)

• Terraform (VPCs, ECS/ASG, RDS, ElastiCache, ECR)

• Laravel + Laravel Horizon; php-fpm tuning (memory_limit, pm.max_children)

• CI/CD pipelines with custom ECS deploy status notifier